The batchGCD algorithm didn't seem to have been used to examine the keys in the database. I was in good company in spotting this possible extension.

Lots of bad SSH keys on Github, and nobody has even run a batch GCD yet. This is a big deal. https://t.co/HcEcHgES9V

— Matthew Green (@matthew_d_green) June 2, 2015

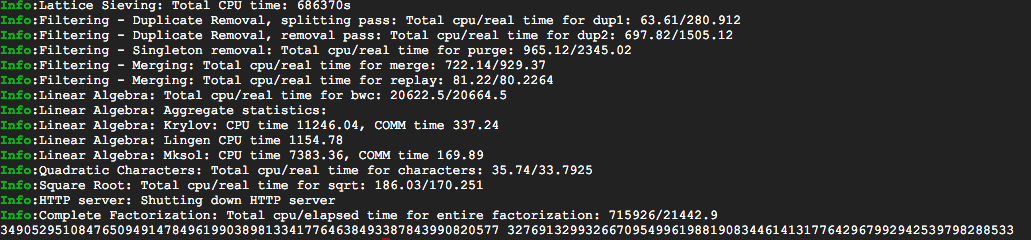

At the time I expected people to report further bad keys found using batchGCD over the next few days. A few days later I came across http://cryptosense.com/batch-gcding-github-ssh-keys/ which looks like exactly that, just in a more restrained writing style. In the meantime I have been having a poke around myself.

Here are some observations from my experiments with the keys on GitHub and with uploading new keys.

- There is now a minimum key length of 1024 bits enforced when submitting keys, with a suggestion of 2048 bits.

- There are no keys below 1024 bits still in the database; all shorter ones have been removed.

- You cannot add a public key from a pair generated with the Debian bug. If you do, you will see this message

- There are very few obviously bad keys (e.g. with a trivial modulus factorisation) on GitHub. I found a handful, all very old.

- I could not find any keys with common divisors in the database. It looks like somebody has been through with batchGCD and notified GitHub, who have revoked them.

- New keys are not checked for common divisors when they are added, but presumably they will eventually get discovered.

- GitHub seems to receive a lot of spam signups these days, where users are listed in the API but their profiles have been deleted and cannot be viewed.
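
For anyone wanting to repeat the common-divisor check, here is a minimal sketch of batch GCD in Python. It is not the code I ran, just an illustration of the usual product tree and remainder tree approach; the names `product_tree` and `batch_gcd` are my own, and it assumes the RSA moduli have already been extracted from the public keys as plain integers.

```python
from math import gcd, prod


def product_tree(moduli):
    """Return the levels of a product tree: leaves first, root (full product) last."""
    levels = [list(moduli)]
    while len(levels[-1]) > 1:
        level = levels[-1]
        # Multiply neighbours pairwise; an odd element out is carried up unchanged.
        levels.append([prod(level[i:i + 2]) for i in range(0, len(level), 2)])
    return levels


def batch_gcd(moduli):
    """For each modulus n, return gcd(n, product of all the other moduli)."""
    levels = product_tree(moduli)
    remainders = levels.pop()  # root level: [product of every modulus]
    while levels:
        level = levels.pop()
        # Push the remainder down the tree, reducing modulo the square of each node.
        remainders = [remainders[i // 2] % (n * n) for i, n in enumerate(level)]
    # At the leaves, remainder // n equals (product of the others) mod n.
    return [gcd(r // n, n) for r, n in zip(remainders, moduli)]


# Toy moduli: the first two share the factor 5, the others share nothing.
shared = batch_gcd([3 * 5, 5 * 7, 11 * 13, 17 * 19])
```

A result of 1 means the modulus shares no factor with any other key in the batch. Anything larger is a shared prime, or the whole modulus if the same key appears twice, so duplicates are worth removing first.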